| age | sex | years | ppe_access |
|---|---|---|---|
| 34 | Male | 2 | 1 |
| 32 | Female | 3 | 1 |
| 32 | Female | 1 | 1 |
| 40 | Male | 4 | 1 |
| 32 | Male | 10 | 1 |

Logistic regression

Binomial responses + overdispersion

Feb 07, 2024

Announcements

- HW 02 due TODAY at 11:59pm

- Project 01
  - presentations in class Wed, Feb 14
  - write-up due Thu, Feb 15 at noon

Learning goals

- Visualizations for logistic regression

- Fit and interpret a logistic regression model for a binomial response variable

- Explore diagnostics for logistic regression

- Summarize GLMs for independent observations

Logistic regression

Bernoulli + Binomial random variables

Logistic regression is used to analyze data with two types of responses:

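- Bernoulli (binary): These responses take on two values, success ($Y = 1$) or failure ($Y = 0$).

- Binomial: Number of successes in a Bernoulli process, i.e., in $m$ independent trials, so $Y \in \{0, 1, \dots, m\}$.

In both instances, the goal is to model $p$, the probability of success.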
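Because a binomial count is the number of successes in $m$ independent Bernoulli trials, fitting a logistic regression to the binomial counts and to the same data expanded into one 0/1 row per trial should give the same coefficient estimates for $p$. The R sketch below checks this on simulated data; the data frame and variable names (`grouped`, `bernoulli`, `x`, `m`, `y`) are hypothetical and not from the lecture.

```r
set.seed(2024)
# Simulated grouped data (hypothetical): m trials per group, y successes
grouped <- data.frame(x = 1:8, m = rep(20, 8))
grouped$y <- rbinom(8, grouped$m, plogis(-2 + 0.5 * grouped$x))

# Binomial response: successes and failures for each group
fit_binomial <- glm(cbind(y, m - y) ~ x, data = grouped, family = binomial)

# Bernoulli response: expand each group into m rows of 0/1 outcomes
bernoulli <- data.frame(
  x = rep(grouped$x, grouped$m),
  success = unlist(lapply(seq_len(nrow(grouped)), function(i) {
    rep(c(1, 0), c(grouped$y[i], grouped$m[i] - grouped$y[i]))
  }))
)
fit_bernoulli <- glm(success ~ x, data = bernoulli, family = binomial)

# The log-odds coefficients agree (up to convergence tolerance)
cbind(binomial = coef(fit_binomial), bernoulli = coef(fit_bernoulli))
```

The two log-likelihoods differ only by the constant $\sum_i \log\binom{m_i}{y_i}$, so the estimates match even though the reported residual deviances do not.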

Logistic regression model

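- The response variable, $\log\left(\frac{p}{1-p}\right)$, is the log-odds of success, modeled as a linear function of the predictors: $\log\left(\frac{p}{1-p}\right) = \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k$

- Use the model to calculate the probability of success, $\hat{p} = \frac{\exp\{\hat\beta_0 + \hat\beta_1 x_1 + \dots + \hat\beta_k x_k\}}{1 + \exp\{\hat\beta_0 + \hat\beta_1 x_1 + \dots + \hat\beta_k x_k\}}$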

- When the response is a Bernoulli random variable, the probabilities can be used to classify each observation as a success or failure
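The last two bullets can be sketched in base R: the inverse logit turns a log-odds value into a probability, and a threshold turns that probability into a predicted class. The log-odds values and the 0.5 threshold below are illustrative assumptions, not values from a fitted model.

```r
# Hypothetical values of the linear predictor (log-odds), for illustration only
log_odds <- c(-2, -0.5, 0, 0.75, 3)

# Inverse logit: p = exp(eta) / (1 + exp(eta)); plogis() computes the same thing
p_manual <- exp(log_odds) / (1 + exp(log_odds))
p <- plogis(log_odds)

# For a Bernoulli response, classify as a success when p exceeds a threshold
threshold <- 0.5  # a common default; other thresholds may suit a given problem
predicted_class <- ifelse(p > threshold, 1, 0)

data.frame(log_odds, p = round(p, 3), predicted_class)
```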

Interpreting coefficients

COVID-19 infection prevention practices at food establishments

Researchers at Wollo University in Ethiopia conducted a study in July and August 2020 to understand factors associated with good COVID-19 infection prevention practices at food establishments. Their study is published in Andualem et al. (2022).

They were particularly interested in understanding the implementation of prevention practices at food establishments, given the workers' increased risk due to daily contact with customers.

Results

Interpretation

The (adjusted) odds ratio for availability of COVID-19 infection prevention guidelines is 2.68 with 95% CI (1.52, 4.75).

The odds ratio comparing workers at a restaurant with such guidelines to those at a restaurant without the guidelines is 2.68, after adjusting for the other factors.

Interpretation: The odds that a worker at a restaurant with COVID-19 infection prevention guidelines uses good infection prevention practices are 2.68 times the odds for a worker at a restaurant without the guidelines, holding all other factors constant.
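This interpretation rests on the fact that a logistic regression coefficient $\beta$ is the log of an odds ratio, so $e^{\beta}$ is the odds ratio. A small R sketch using the reported values (2.68, 95% CI 1.52 to 4.75) moves them back to the log-odds scale; the coefficient itself is not given in the text, so it is recovered here from the rounded odds ratio.

```r
# Reported adjusted odds ratio and 95% CI for availability of guidelines
or_guidelines <- 2.68
ci_or <- c(1.52, 4.75)

beta_guidelines <- log(or_guidelines)  # coefficient on the log-odds scale
ci_beta <- log(ci_or)                  # CI endpoints on the log-odds scale

# Percent change in the odds associated with having the guidelines
pct_change_odds <- (or_guidelines - 1) * 100

round(c(beta = beta_guidelines, ci_low = ci_beta[1], ci_high = ci_beta[2],
        pct_change = pct_change_odds), 3)
```

An odds ratio of 2.68 therefore corresponds to odds that are about 168% higher for workers at restaurants with the guidelines.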

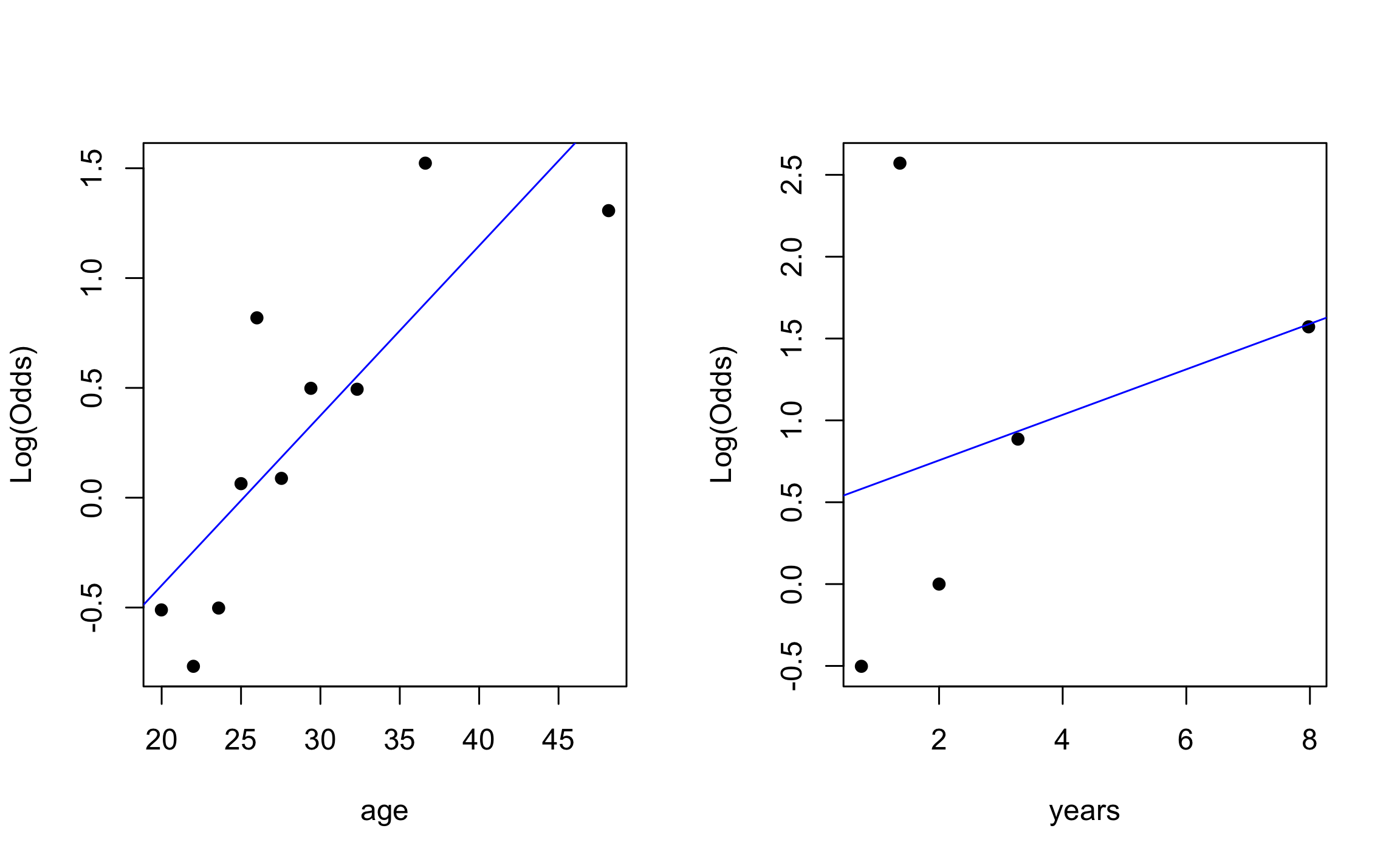

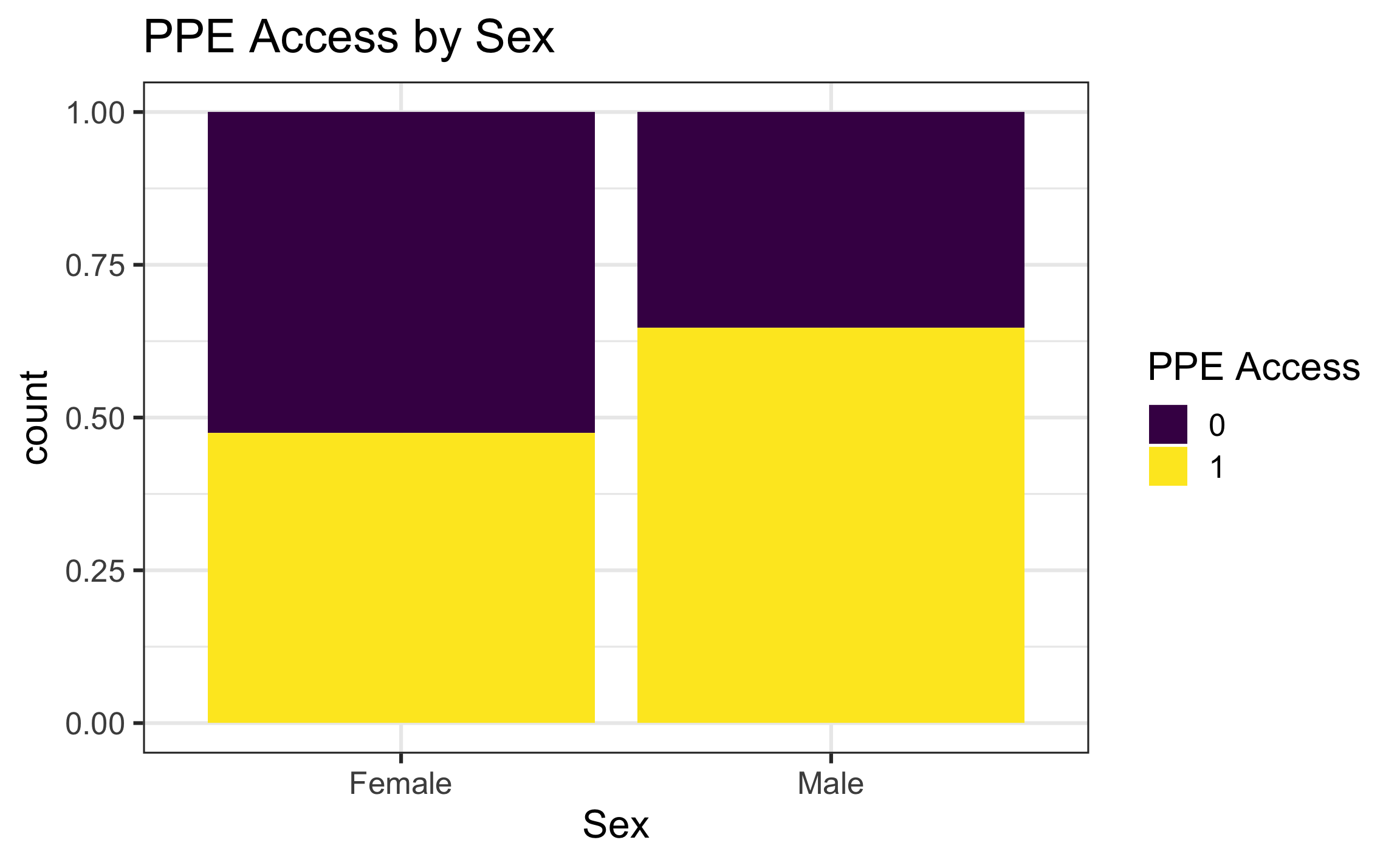

Visualizations for logistic regression

Access to personal protective equipment

We will use the data from Andualem et al. (2022) to explore the association between age, sex, years of service, and whether someone works at a food establishment with access to personal protective equipment (PPE) as of August 2020. We will use access to PPE as a proxy for wearing PPE.

EDA for binary response


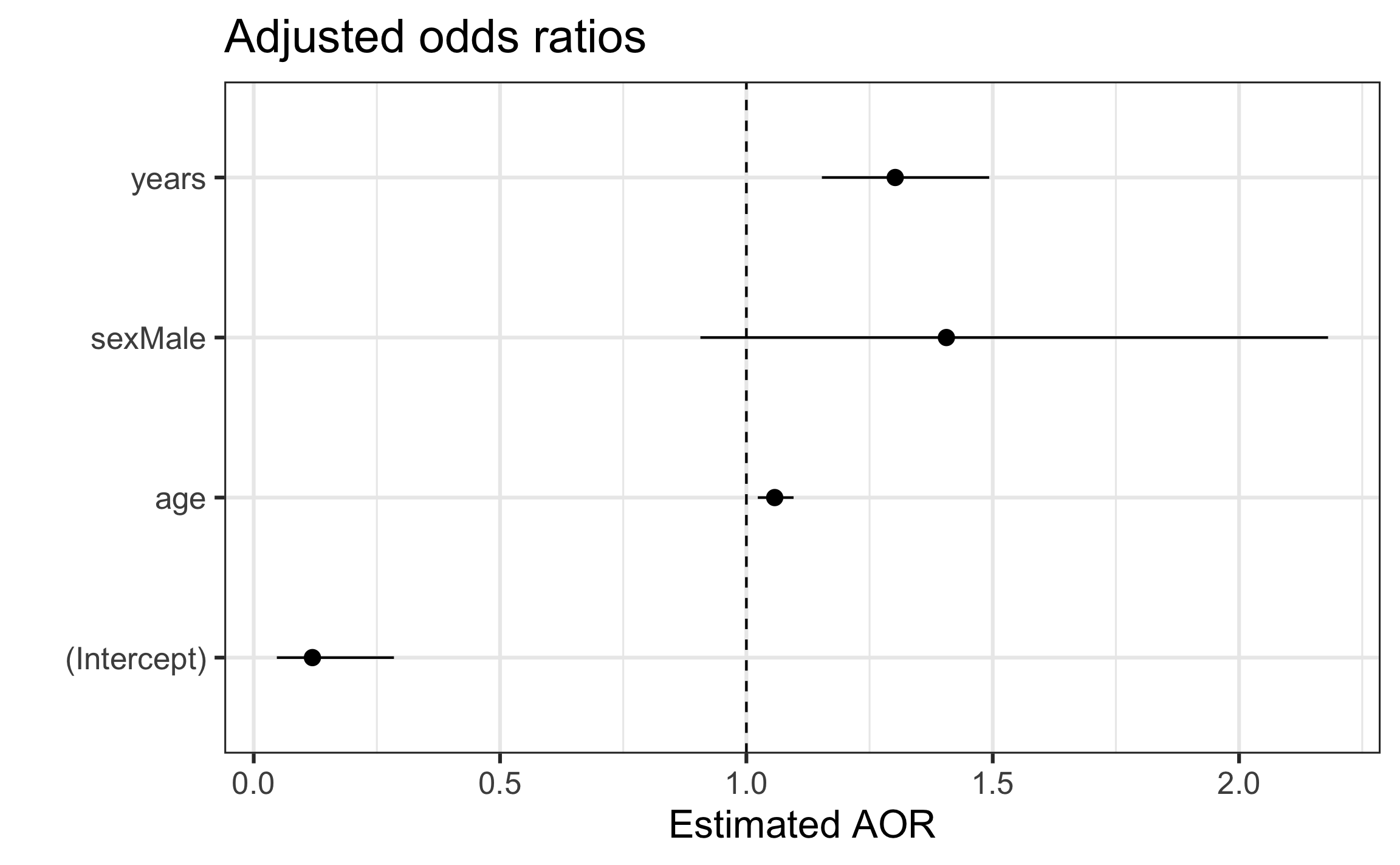

Model results

```r
library(broom)  # tidy()
library(knitr)  # kable()

ppe_model <- glm(factor(ppe_access) ~ age + sex + years,
                 data = covid_df, family = binomial)

tidy(ppe_model, conf.int = TRUE) |>
  kable(digits = 3)
```

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |
|---|---|---|---|---|---|---|
| (Intercept) | -2.127 | 0.458 | -4.641 | 0.000 | -3.058 | -1.257 |
| age | 0.056 | 0.017 | 3.210 | 0.001 | 0.023 | 0.091 |
| sexMale | 0.341 | 0.224 | 1.524 | 0.128 | -0.098 | 0.780 |
| years | 0.264 | 0.066 | 4.010 | 0.000 | 0.143 | 0.401 |
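The estimates above are on the log-odds scale. A sketch of how to turn them into odds ratios and a predicted probability, using the rounded values from the table (so results are approximate); the worker profile (age 34, male, 2 years of service) is a hypothetical choice. With the fitted model available, `tidy(ppe_model, conf.int = TRUE, exponentiate = TRUE)` and `predict(ppe_model, newdata = ..., type = "response")` give the same quantities without rounding.

```r
# Coefficient estimates from the ppe_model output above (rounded to 3 digits)
b <- c(intercept = -2.127, age = 0.056, sexMale = 0.341, years = 0.264)

# Odds ratios: exponentiate the slope coefficients
odds_ratios <- exp(b[-1])

# 95% CI for the age odds ratio, from the conf.low / conf.high columns
age_or_ci <- exp(c(0.023, 0.091))

# Predicted probability of PPE access for a hypothetical 34-year-old male
# worker with 2 years of service
eta <- b[["intercept"]] + b[["age"]] * 34 + b[["sexMale"]] * 1 + b[["years"]] * 2
p_hat <- plogis(eta)

round(odds_ratios, 3)
round(c(age_or_low = age_or_ci[1], age_or_high = age_or_ci[2], p_hat = p_hat), 3)
```

For example, each additional year of service is associated with odds of PPE access about 1.30 times as large, holding age and sex constant.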

Visualizing coefficient estimates

Logistic regression for binomial response variable

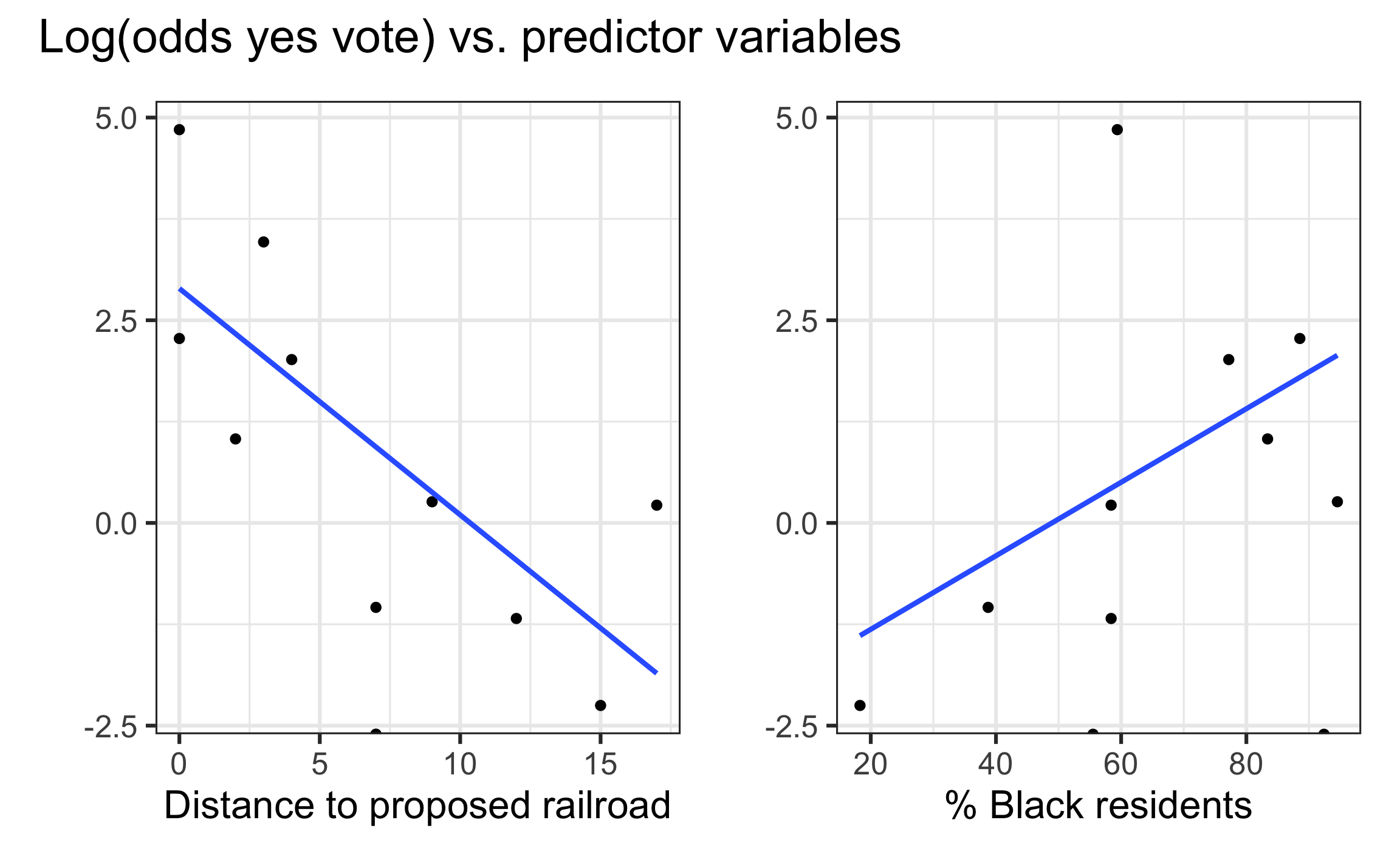

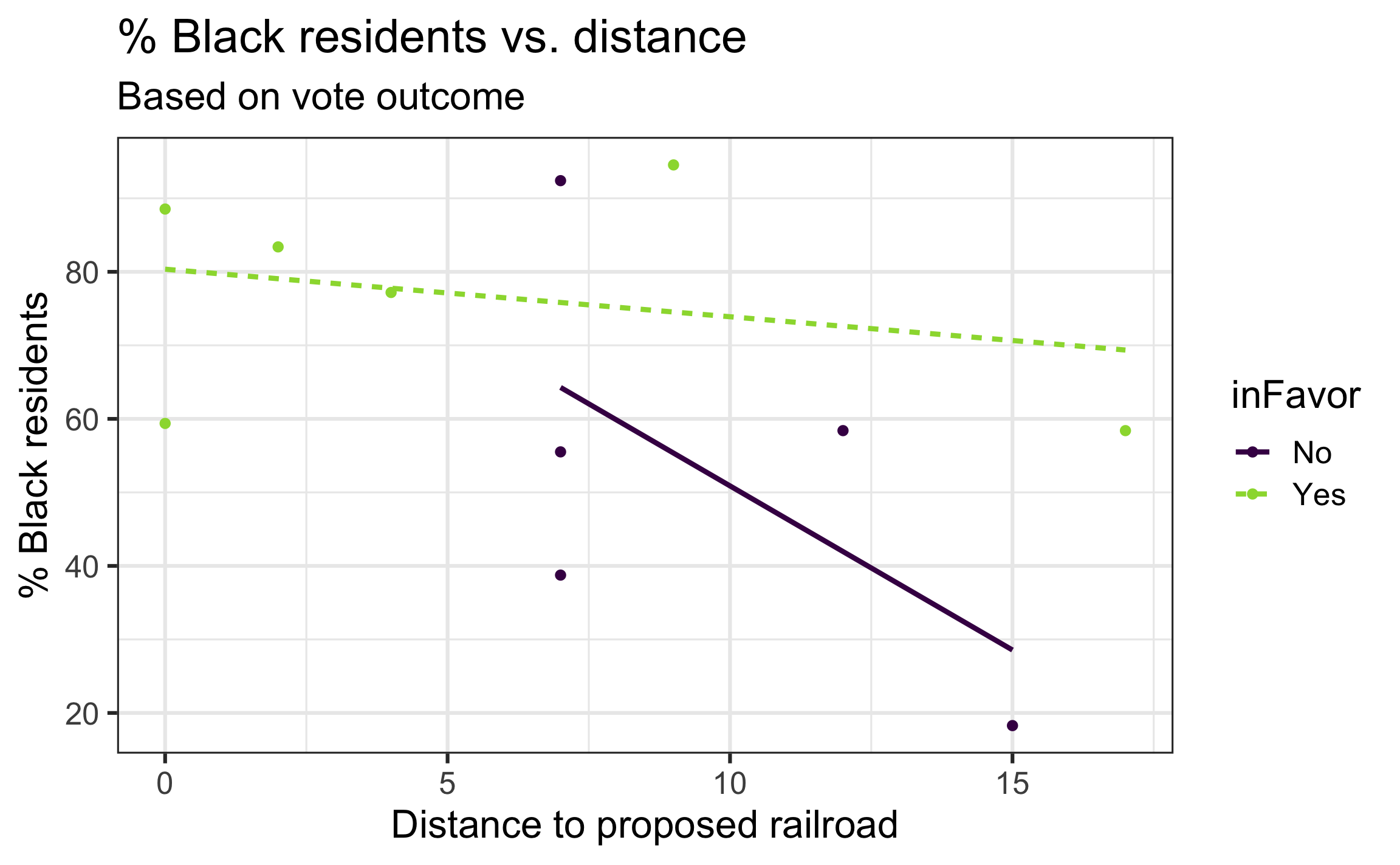

Data: Supporting railroads in the 1870s

The data set RR_Data_Hale.csv contains information on support for referendums related to railroad subsidies for 11 communities in Hale County, Alabama in the 1870s. The data were originally collected from the US Census by historian Michael Fitzgerald and analyzed as part of a thesis project by a student at St. Olaf College. The variables used in the analysis are:

- pctBlack: percentage of Black residents in the community
- distance: distance the proposed railroad is from the community (in miles)
- YesVotes: number of “yes” votes in favor of the proposed railroad line
- NumVotes: number of votes cast in the election

Primary question: Was voting on the railroad referendum related to the distance from the proposed railroad line, after adjusting for the demographics of a community?

The data

| County | popBlack | popWhite | popTotal | pctBlack | distance | YesVotes | NumVotes |
|---|---|---|---|---|---|---|---|
| Carthage | 841 | 599 | 1440 | 58.40 | 17 | 61 | 110 |
| Cederville | 1774 | 146 | 1920 | 92.40 | 7 | 0 | 15 |
| Five Mile Creek | 140 | 626 | 766 | 18.28 | 15 | 4 | 42 |
| Greensboro | 1425 | 975 | 2400 | 59.38 | 0 | 1790 | 1804 |
| Harrison | 443 | 355 | 798 | 55.51 | 7 | 0 | 15 |

Exploratory data analysis


Check for potential multicollinearity between distance and pctBlack and for a potential interaction effect.
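One way to explore a binomial response is the empirical logit, the observed log-odds of a "yes" vote in each community. A common choice (assumed here) adds 0.5 to the yes and no counts so that communities with 0 yes votes still get a finite value. The sketch uses only the five communities shown in the data preview, so the correlation it prints is illustrative; the same code applies to all 11 rows.

```r
# The five communities shown in the data preview (not the full 11)
rr_preview <- data.frame(
  County = c("Carthage", "Cederville", "Five Mile Creek", "Greensboro", "Harrison"),
  pctBlack = c(58.40, 92.40, 18.28, 59.38, 55.51),
  distance = c(17, 7, 15, 0, 7),
  YesVotes = c(61, 0, 4, 1790, 0),
  NumVotes = c(110, 15, 42, 1804, 15)
)

# Empirical logit with 0.5 added to each count
no_votes <- rr_preview$NumVotes - rr_preview$YesVotes
rr_preview$emp_logit <- log((rr_preview$YesVotes + 0.5) / (no_votes + 0.5))

# Quick multicollinearity check: correlation between the two predictors
cor(rr_preview$distance, rr_preview$pctBlack)

rr_preview[, c("County", "distance", "pctBlack", "emp_logit")]
```

Plotting `emp_logit` against `distance`, with points colored by levels of `pctBlack`, is one way to look for a linear trend and a possible interaction.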

Model

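Let $p_i$ be the proportion of “yes” votes in community $i$. The model is

$$\log\left(\frac{p_i}{1 - p_i}\right) = \beta_0 + \beta_1\,\text{distance}_i + \beta_2\,\text{pctBlack}_i$$

Likelihood

With $Y_i$ = YesVotes and $m_i$ = NumVotes for community $i$,

$$L(\beta_0, \beta_1, \beta_2) = \prod_{i=1}^{n} \binom{m_i}{Y_i} p_i^{Y_i} (1 - p_i)^{m_i - Y_i}$$

Use IRLS (iteratively reweighted least squares) to find $\hat\beta_0, \hat\beta_1, \hat\beta_2$.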
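A minimal base-R sketch of IRLS for the binomial logit model: each iteration forms working weights $w_i = m_i \hat p_i (1 - \hat p_i)$ and a working response $z_i = \hat\eta_i + (Y_i - m_i \hat p_i)/w_i$, then solves a weighted least squares problem. The function `irls_binomial()` and the simulated data are hypothetical; the final line compares the result with `glm()`, which also fits GLMs by IRLS.

```r
# Minimal IRLS for binomial logistic regression (illustrative sketch)
irls_binomial <- function(X, y, m, tol = 1e-10, max_iter = 50) {
  beta <- rep(0, ncol(X))                  # start at p = 0.5 for everyone
  for (iter in seq_len(max_iter)) {
    eta <- drop(X %*% beta)                # linear predictor
    p <- plogis(eta)                       # fitted probabilities
    w <- m * p * (1 - p)                   # working weights
    z <- eta + (y - m * p) / w             # working response
    # Weighted least squares: solve (X'WX) beta = X'Wz
    beta_new <- drop(solve(crossprod(X, w * X), crossprod(X, w * z)))
    if (max(abs(beta_new - beta)) < tol) return(beta_new)
    beta <- beta_new
  }
  beta
}

# Simulated grouped data (hypothetical), loosely shaped like the railroad data
set.seed(1)
n <- 30
distance <- runif(n, 0, 20)
pct_black <- runif(n, 10, 95)
m <- sample(20:200, n, replace = TRUE)
y <- rbinom(n, m, plogis(3 - 0.25 * distance - 0.01 * pct_black))

X <- cbind(1, distance, pct_black)
beta_irls <- irls_binomial(X, y, m)
fit <- glm(cbind(y, m - y) ~ distance + pct_black, family = binomial)

cbind(irls = beta_irls, glm = coef(fit))
```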

Model in R

```r
library(broom)  # tidy()
library(knitr)  # kable()

# Assumes RR_Data_Hale.csv is in the working directory
rr <- read.csv("RR_Data_Hale.csv")

# Binomial response: successes (yes votes) and failures (all other votes)
rr_model <- glm(cbind(YesVotes, NumVotes - YesVotes) ~ distance + pctBlack,
                data = rr, family = binomial)

tidy(rr_model, conf.int = TRUE) |>
  kable(digits = 3)
```

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |
|---|---|---|---|---|---|---|
| (Intercept) | 4.222 | 0.297 | 14.217 | 0.000 | 3.644 | 4.809 |
| distance | -0.292 | 0.013 | -22.270 | 0.000 | -0.318 | -0.267 |
| pctBlack | -0.013 | 0.004 | -3.394 | 0.001 | -0.021 | -0.006 |
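A sketch of how these rounded estimates translate into odds ratios and a fitted probability. The 10-percentage-point change in pctBlack and the Greensboro profile (distance 0, pctBlack 59.38, taken from the data preview) are illustrative choices.

```r
# Coefficient estimates from rr_model above (rounded)
b0 <- 4.222; b_distance <- -0.292; b_pct <- -0.013

# Odds ratio for a one-mile increase in distance, holding pctBlack constant
or_distance <- exp(b_distance)
pct_change_distance <- (or_distance - 1) * 100   # roughly a 25% decrease

# Odds ratio for a 10-percentage-point increase in pctBlack
or_pct_10 <- exp(10 * b_pct)

# Estimated probability of a "yes" vote for a community 0 miles from the line
# with pctBlack = 59.38 (the Greensboro values in the data preview)
p_greensboro <- plogis(b0 + b_distance * 0 + b_pct * 59.38)

round(c(or_distance = or_distance, pct_change_distance = pct_change_distance,
        or_pct_10 = or_pct_10, p_greensboro = p_greensboro), 3)
```

So each additional mile from the proposed line is associated with about 25% lower odds of a “yes” vote, after adjusting for pctBlack.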

Application exercise


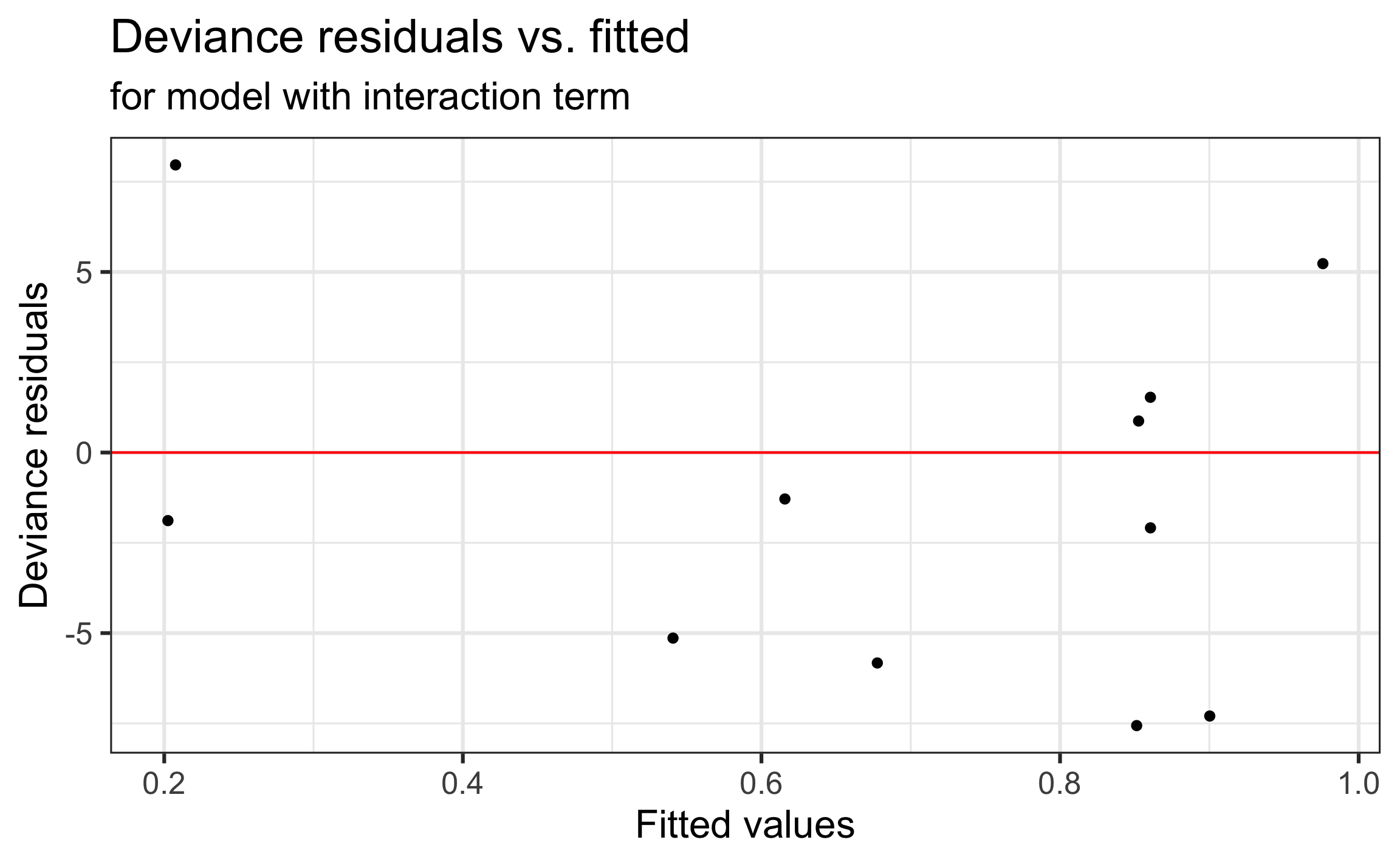

Residuals

Similar to Poisson regression, there are two types of residuals: Pearson and deviance residuals.

Pearson residuals

Deviance residuals
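For a binomial response with $m_i$ trials and fitted probability $\hat p_i$, the Pearson residual is $\frac{Y_i - m_i\hat p_i}{\sqrt{m_i\hat p_i(1-\hat p_i)}}$ and the deviance residual is $\text{sign}(Y_i - m_i\hat p_i)\sqrt{2\left[Y_i\log\frac{Y_i}{m_i\hat p_i} + (m_i - Y_i)\log\frac{m_i - Y_i}{m_i - m_i\hat p_i}\right]}$. A base-R sketch that computes both by hand on simulated (hypothetical) data and compares them with `residuals()` for a `glm`:

```r
set.seed(310)
# Simulated grouped binomial data (hypothetical), m_i trials per observation
n <- 25
x <- runif(n, 0, 10)
m <- sample(10:60, n, replace = TRUE)
y <- rbinom(n, m, plogis(1 - 0.3 * x))
fit <- glm(cbind(y, m - y) ~ x, family = binomial)

p_hat <- fitted(fit)   # fitted probabilities of success
mu <- m * p_hat        # fitted number of successes

# Pearson residuals: (observed - expected) / sqrt(binomial variance)
pearson <- (y - mu) / sqrt(m * p_hat * (1 - p_hat))

# Deviance residuals; y * log(y / mu) is taken as 0 when y = 0 (its limit),
# and likewise for the failure term when y = m
term_success <- ifelse(y == 0, 0, y * log(y / mu))
term_failure <- ifelse(y == m, 0, (m - y) * log((m - y) / (m - mu)))
# pmax() guards against tiny negative values from floating-point rounding
deviance_res <- sign(y - mu) * sqrt(pmax(2 * (term_success + term_failure), 0))

# Compare with the built-in residuals() for a glm
head(cbind(pearson, pearson_glm = residuals(fit, type = "pearson"),
           deviance_res, deviance_glm = residuals(fit, type = "deviance")))
```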

Plot of deviance residuals

Goodness of fit

Similar to Poisson regression, the sum of the squared deviance residuals is used to assess goodness of fit.

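- When each $m_i$ is large and the model is a good fit, the residual deviance approximately follows a $\chi^2$ distribution with $n - p$ degrees of freedom.

- Recall $n$ is the number of observations and $p$ is the number of model parameters (including the intercept).

- Recall the mean of a $\chi^2$ distribution is its degrees of freedom.

- If the model fits, we expect the residual deviance to be approximately what value?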
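A sketch of the goodness-of-fit test in base R, on simulated data generated from the same form as the fitted model (so a good fit is expected). The upper-tail probability of the $\chi^2_{n-p}$ distribution at the residual deviance is the p-value; a small value is evidence of lack of fit. The data are hypothetical, and the $\chi^2$ approximation assumes the $m_i$ are reasonably large.

```r
set.seed(7)
# Simulated grouped binomial data (hypothetical)
n <- 20
x <- runif(n, 0, 10)
m <- sample(30:80, n, replace = TRUE)
y <- rbinom(n, m, plogis(-1 + 0.3 * x))
fit <- glm(cbind(y, m - y) ~ x, family = binomial)

resid_dev <- deviance(fit)    # sum of squared deviance residuals
resid_df <- df.residual(fit)  # n - p = 20 - 2

# Goodness-of-fit test: compare the residual deviance to chi-square(n - p)
p_value <- pchisq(resid_dev, df = resid_df, lower.tail = FALSE)

c(deviance = resid_dev, df = resid_df, p_value = p_value)
```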

Overdispersion

Adjusting for overdispersion

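Overdispersion occurs when there is extra-binomial variation, i.e., the variance is greater than what we would expect under the binomial model, $\text{Var}(Y_i) = m_i p_i (1 - p_i)$.

Similar to Poisson regression, we can adjust for overdispersion in the binomial regression model by using a dispersion parameter $\phi$, estimated as

$$\hat\phi = \frac{\sum_{i=1}^{n} (\text{Pearson residual}_i)^2}{n - p}$$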

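- By multiplying the binomial variance by $\hat\phi$, we account for the extra variability in the responses.

- By multiplying the standard errors by $\sqrt{\hat\phi}$, we inflate them to reflect that extra variability.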
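A base-R sketch of the dispersion estimate on simulated overdispersed data (hypothetical): each group's log-odds gets its own random shift, which produces more variation than a binomial allows. It computes $\hat\phi$ from the Pearson residuals of the binomial fit and checks it against the dispersion reported by a `quasibinomial` fit, whose standard errors should equal the binomial ones times $\sqrt{\hat\phi}$.

```r
set.seed(310)
# Simulated data with extra-binomial variation (hypothetical)
n <- 40
x <- runif(n, 0, 10)
m <- sample(30:100, n, replace = TRUE)
p_i <- plogis(-1 + 0.3 * x + rnorm(n, sd = 0.8))  # random shift per group
y <- rbinom(n, m, p_i)

fit_binom <- glm(cbind(y, m - y) ~ x, family = binomial)
fit_quasi <- glm(cbind(y, m - y) ~ x, family = quasibinomial)

# Dispersion estimate: sum of squared Pearson residuals over n - p
phi_hat <- sum(residuals(fit_binom, type = "pearson")^2) / df.residual(fit_binom)

# Quasibinomial SEs are the binomial SEs multiplied by sqrt(phi_hat)
se_binom <- summary(fit_binom)$coefficients[, "Std. Error"]
se_quasi <- summary(fit_quasi)$coefficients[, "Std. Error"]

c(phi_hat = phi_hat, summary_dispersion = summary(fit_quasi)$dispersion)
rbind(se_binom, se_quasi, ratio = se_quasi / se_binom)
```

The coefficient estimates are identical in the two fits; only the standard errors (and therefore the tests and intervals) change.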

Adjusting for overdispersion

- We adjust for overdispersion using a quasibinomial model.

- “Quasi” reflects the fact that we are no longer using a binomial model with a true likelihood.

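- The standard errors of the coefficients are $SE_Q(\hat\beta) = \sqrt{\hat\phi}\, SE(\hat\beta)$, where $SE(\hat\beta)$ is the standard error from the binomial model.

- Inference for individual coefficients is done using the $t$ distribution with $n - p$ degrees of freedom.

- Inference comparing nested models is done using the $F$ distribution (drop-in-deviance $F$ test).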
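A sketch of quasibinomial inference in base R on simulated overdispersed data (hypothetical; `z` is an extra predictor added only to have a nested comparison). It reproduces the $t$-test p-values from `summary()` and computes the drop-in-deviance $F$ statistic, $F = \frac{(D_{\text{reduced}} - D_{\text{full}})/\Delta df}{\hat\phi_{\text{full}}}$, which `anova(..., test = "F")` also reports.

```r
set.seed(310)
# Simulated overdispersed grouped data (hypothetical)
n <- 40
x <- runif(n, 0, 10)
z <- runif(n, 0, 1)
m <- sample(30:100, n, replace = TRUE)
y <- rbinom(n, m, plogis(-1 + 0.3 * x + rnorm(n, sd = 0.8)))

fit_full <- glm(cbind(y, m - y) ~ x + z, family = quasibinomial)
fit_reduced <- glm(cbind(y, m - y) ~ x, family = quasibinomial)

# t tests for individual coefficients use n - p residual degrees of freedom
coefs <- summary(fit_full)$coefficients
t_p_values <- 2 * pt(-abs(coefs[, "t value"]), df = df.residual(fit_full))

# Drop-in-deviance F test for the nested models
phi_full <- summary(fit_full)$dispersion
df_diff <- df.residual(fit_reduced) - df.residual(fit_full)
f_stat <- ((deviance(fit_reduced) - deviance(fit_full)) / df_diff) / phi_full
f_p_value <- pf(f_stat, df_diff, df.residual(fit_full), lower.tail = FALSE)

anova(fit_reduced, fit_full, test = "F")
```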

Application exercise


References