Using likelihoods

Jan 22, 2024

Announcements

- HW 01 due Wednesday at 11:59pm

Computing set up

Topics

Review inference for multiple linear regression

Using likelihoods

Inference for multiple linear regression

Data: Kentucky Derby Winners

Today’s data is from the Kentucky Derby, an annual 1.25-mile horse race held at the Churchill Downs race track in Louisville, KY. The data is in the file derbyplus.csv and contains information for races 1896 - 2017.

Response variable

speed: Average speed of the winner in feet per second (ft/s)

Additional variable

winner: Winning horse

Predictor variables

year: Year of the race

condition: Condition of the track (good, fast, slow)

starters: Number of horses who raced

Goal: Understand variability in average winner speed based on characteristics of the race.

Data

Candidate models

Model 1: Main effects model (year, condition, starters)

Model 2: Main effects + $year^2$

Inference for regression

Use statistical inference to

Evaluate if predictors are statistically significant (not necessarily practically significant!)

Quantify uncertainty in coefficient estimates

Quantify uncertainty in model predictions

If LINE assumptions are met, we can use inferential methods based on mathematical models. If at least linearity and independence are met, we can use simulation-based inference methods.

Inference for regression

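When LINE assumptions are met…

Use least squares regression to obtain the estimates for the model coefficients $\beta_0, \beta_1, \ldots, \beta_p$ and for $\sigma$

where $\hat{\sigma}$ is the regression standard error

$$\hat{\sigma} = \sqrt{\frac{\sum_{i=1}^n (y_i - \hat{y}_i)^2}{n - p - 1}}$$

The goal is to use the estimated values to draw conclusions about $\beta_j$

- Use $\hat{\sigma}$ to calculate $SE_{\hat{\beta}_j}$

- Use $SE_{\hat{\beta}_j}$ to conduct hypothesis tests and construct confidence intervals for $\beta_j$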

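Hypothesis testing for $\beta_j$

- State the hypotheses: $H_0: \beta_j = 0$ vs. $H_a: \beta_j \neq 0$, given the other variables in the model.

- Calculate the test statistic: $t = \dfrac{\hat{\beta}_j - 0}{SE_{\hat{\beta}_j}}$

- Calculate the p-value. The p-value is calculated from a $t_{n-p-1}$ distribution: p-value $= 2P(T > |t|)$, where $T \sim t_{n-p-1}$.

- State the conclusion in context of the data.

- Reject $H_0$ if the p-value is sufficiently small; otherwise, fail to reject $H_0$.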
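The quantities in these steps ($\hat{\beta}_j$, $\hat{\sigma}$, $SE_{\hat{\beta}_j}$, and $t$) can be computed directly with matrix algebra. Below is a minimal NumPy sketch on made-up toy data (not the Kentucky Derby data); in practice these values come from the fitted model output, and the p-value step is omitted here because it needs a $t$ distribution CDF.

```python
import numpy as np

# Hypothetical toy data (NOT the derby data): n = 6 observations, p = 1 predictor.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1, 12.0])

X = np.column_stack([np.ones_like(x), x])          # design matrix with an intercept column
n, k = X.shape                                     # k = p + 1 coefficients
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)   # least squares estimates

resid = y - X @ beta_hat
sigma_hat = np.sqrt(resid @ resid / (n - k))       # regression standard error, df = n - p - 1
se = sigma_hat * np.sqrt(np.diag(np.linalg.inv(X.T @ X)))  # SE of each beta_hat_j
t_stat = (beta_hat - 0) / se                       # test statistic for H0: beta_j = 0

print("estimates:", beta_hat)
print("std errors:", se)
print("t statistics:", t_stat)
```

The residual degrees of freedom `n - k` equal $n - p - 1$, matching the $t_{n-p-1}$ distribution used for the p-value.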

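Confidence interval for $\beta_j$

The $C\%$ confidence interval for $\beta_j$ is

$$\hat{\beta}_j \pm t^* \times SE_{\hat{\beta}_j}$$

where the critical value $t^*$ is computed from the $t_{n-p-1}$ distribution.

General interpretation for the confidence interval [LB, UB]:

We are $C\%$ confident that for every one unit increase in $x_j$, the response $y$ is expected to change by LB to UB units, holding all else constant.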

Application exercise

Measures of model performance

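- $R^2$: Proportion of variability in the response explained by the model. It will always increase as predictors are added, so it shouldn't be used to compare models.

- Adjusted $R^2$: Similar to $R^2$, but with a penalty for additional terms; higher is better.

- AIC: Likelihood-based measure that balances model fit and complexity; lower is better.

- BIC: Similar to AIC, but with a penalty for extra terms that grows with the sample size; lower is better.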

Model summary statistics

Use the glance() function to get model summary statistics

| model | r.squared | adj.r.squared | AIC | BIC |
|---|---|---|---|---|
| Model1 | 0.730 | 0.721 | 259.478 | 276.302 |
| Model2 | 0.827 | 0.819 | 207.429 | 227.057 |
| Model3 | 0.751 | 0.738 | 253.584 | 276.016 |
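One way to read this table is to apply each criterion's direction (higher adjusted $R^2$, lower AIC, lower BIC) and see whether they agree. The sketch below does exactly that with the values copied from the table; plain $R^2$ is left out because it always increases as predictors are added. The dictionary layout is just an illustrative choice.

```python
# Summary statistics copied from the table above.
stats = {
    "Model1": {"adj.r.squared": 0.721, "AIC": 259.478, "BIC": 276.302},
    "Model2": {"adj.r.squared": 0.819, "AIC": 207.429, "BIC": 227.057},
    "Model3": {"adj.r.squared": 0.738, "AIC": 253.584, "BIC": 276.016},
}

best_adj_r2 = max(stats, key=lambda m: stats[m]["adj.r.squared"])  # higher is better
best_aic = min(stats, key=lambda m: stats[m]["AIC"])               # lower is better
best_bic = min(stats, key=lambda m: stats[m]["BIC"])               # lower is better

print(best_adj_r2, best_aic, best_bic)  # Model2 Model2 Model2
```

When the criteria disagree, the choice also depends on the research question and the characteristics of a good final model.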

Which model do you choose based on these statistics?

Characteristics of a “good” final model

Model can be used to answer primary research questions

Predictor variables control for important covariates

Potential interactions have been investigated

Variables are centered, as needed, for more meaningful interpretations

Unnecessary terms are removed

Assumptions are met and influential points have been addressed

Model tells a “persuasive story parsimoniously”

Using likelihoods

Learning goals

Describe the concept of a likelihood

Construct the likelihood for a simple model

Define the Maximum Likelihood Estimate (MLE) and use it to answer an analysis question

Identify three ways to calculate or approximate the MLE and apply these methods to find the MLE for a simple model

Use likelihoods to compare models

What is the likelihood?

A likelihood is a function that tells us how likely we are to observe our data for a given parameter value (or values).

Unlike Ordinary Least Squares (OLS), likelihoods do not require the responses to be independent, identically distributed, and normal (iidN).

They are not the same as probability functions.

Probability function vs. likelihood

Probability function: Fixed parameter value(s) + input possible outcomes

Likelihood: Fixed data + input possible parameter values
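The same formula can play both roles; only what is held fixed changes. As an illustrative assumption (not a model from these slides), take the number of home-team fouls among three fouls to be Binomial(3, p). Fixing p and varying the outcome gives a probability function that sums to 1; fixing the observed outcome and varying p gives a likelihood, which need not sum to 1.

```python
from math import comb

def binom_pmf(y, n, p):
    """P(Y = y) for Y ~ Binomial(n, p)."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)

n = 3

# Probability function: fix the parameter p = 0.5, vary the outcome y.
probs = [binom_pmf(y, n, 0.5) for y in range(n + 1)]
print(probs)       # [0.125, 0.375, 0.375, 0.125]
print(sum(probs))  # 1.0

# Likelihood: fix the observed data (y = 2 home fouls out of 3), vary p.
grid = [0.1, 0.3, 0.5, 0.7, 0.9]
liks = [binom_pmf(2, n, p) for p in grid]
print([round(lik, 3) for lik in liks])  # these values are not required to sum to 1
```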

Data: Fouls in college basketball games

The data set 04-refs.csv includes 30 randomly selected NCAA men’s basketball games played in the 2009 - 2010 season.

We will focus on the variables foul1, foul2, and foul3, which indicate which team had a foul called on them for the 1st, 2nd, and 3rd fouls, respectively.

H: Foul was called on the home team

V: Foul was called on the visiting team

We are focusing on the first three fouls for this analysis, but this could easily be extended to include all fouls in a game.

Fouls in college basketball games

| game | date | visitor | hometeam | foul1 | foul2 | foul3 |
|---|---|---|---|---|---|---|
| 166 | 20100126 | CLEM | BC | V | V | V |
| 224 | 20100224 | DEPAUL | CIN | H | H | V |
| 317 | 20100109 | MARQET | NOVA | H | H | H |
| 214 | 20100228 | MARQET | SETON | V | V | H |
| 278 | 20100128 | SETON | SFL | H | V | V |

We will treat the games as independent in this analysis.

Different likelihood models

Model 1 (Unconditional Model):

- What is the probability the referees call a foul on the home team, assuming foul calls within a game are independent?

Model 2 (Conditional Model):

Is there a tendency for the referees to call more fouls on the visiting team or home team?

Is there a tendency for referees to call a foul on the team that already has more fouls?

Ultimately we want to decide which model is better.

Exploratory data analysis

Model 1: Unconditional model

What is the probability the referees call a foul on the home team, assuming foul calls within a game are independent?

Likelihood

Let $p_H$ be the probability the referees call a foul on the home team.

Example

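For a single game, the likelihood contribution multiplies $p_H$ for each foul called on the home team and $(1 - p_H)$ for each foul called on the visiting team. For example, if the first three fouls are $H, H, V$, the contribution is $p_H \cdot p_H \cdot (1 - p_H) = p_H^2(1 - p_H)$.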
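Under the unconditional model, each foul is treated as independent, so a game's likelihood contribution is a product over its fouls. A minimal sketch, assuming fouls are encoded as a string of `H`/`V` characters; the function name `model1_contribution` is hypothetical.

```python
def model1_contribution(fouls, p_h):
    """Likelihood contribution of one game's foul sequence under Model 1.

    fouls: string such as "HHV" (H = foul on home team, V = foul on visitor).
    p_h:   probability that any single foul is called on the home team.
    Fouls are assumed independent, so the contribution is a product.
    """
    lik = 1.0
    for foul in fouls:
        if foul == "H":
            lik *= p_h
        elif foul == "V":
            lik *= 1 - p_h
        else:
            raise ValueError(f"unexpected foul code: {foul!r}")
    return lik

# H, H, V gives p_H * p_H * (1 - p_H) = p_H^2 (1 - p_H)
print(model1_contribution("HHV", 0.5))  # 0.125
```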

Model 1: Likelihood contribution

| Foul 1 | Foul 2 | Foul 3 | n | Likelihood contribution |
|---|---|---|---|---|
| H | H | H | 3 | $p_H^3$ |
| H | H | V | 2 | $p_H^2(1 - p_H)$ |
| H | V | H | 3 | $p_H^2(1 - p_H)$ |
| H | V | V | 7 | A |
| V | H | H | 7 | B |
| V | H | V | 1 | $p_H(1 - p_H)^2$ |
| V | V | H | 5 | $p_H(1 - p_H)^2$ |
| V | V | V | 2 | $(1 - p_H)^3$ |

Fill in A and B.


Model 1: Likelihood function

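Because the observations (the games) are independent, the likelihood is the product of the 30 games' likelihood contributions:

$$Lik(p_H) = \prod_{i=1}^{30} Lik_i(p_H) = p_H^{46}(1 - p_H)^{44}$$

since 46 of the 90 fouls were called on the home team and 44 on the visiting team.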

We will use this function to find the maximum likelihood estimate (MLE). The MLE is the value of $p_H$ between 0 and 1 that makes the observed data most likely.
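The exponents in the Model 1 likelihood come from counting fouls across the likelihood contribution table. The sketch below tallies them from the table's counts and builds the likelihood function; the dictionary encoding of the table is an illustrative choice.

```python
# Number of games with each foul sequence, from the Model 1 likelihood contribution table.
counts = {
    "HHH": 3, "HHV": 2, "HVH": 3, "HVV": 7,
    "VHH": 7, "VHV": 1, "VVH": 5, "VVV": 2,
}

n_games = sum(counts.values())
n_home = sum(seq.count("H") * k for seq, k in counts.items())   # fouls on home team
n_visit = sum(seq.count("V") * k for seq, k in counts.items())  # fouls on visiting team
print(n_games, n_home, n_visit)  # 30 46 44

def likelihood(p_h):
    """Lik(p_H) = p_H^46 (1 - p_H)^44, treating games and fouls as independent."""
    return p_h**n_home * (1 - p_h) ** n_visit
```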

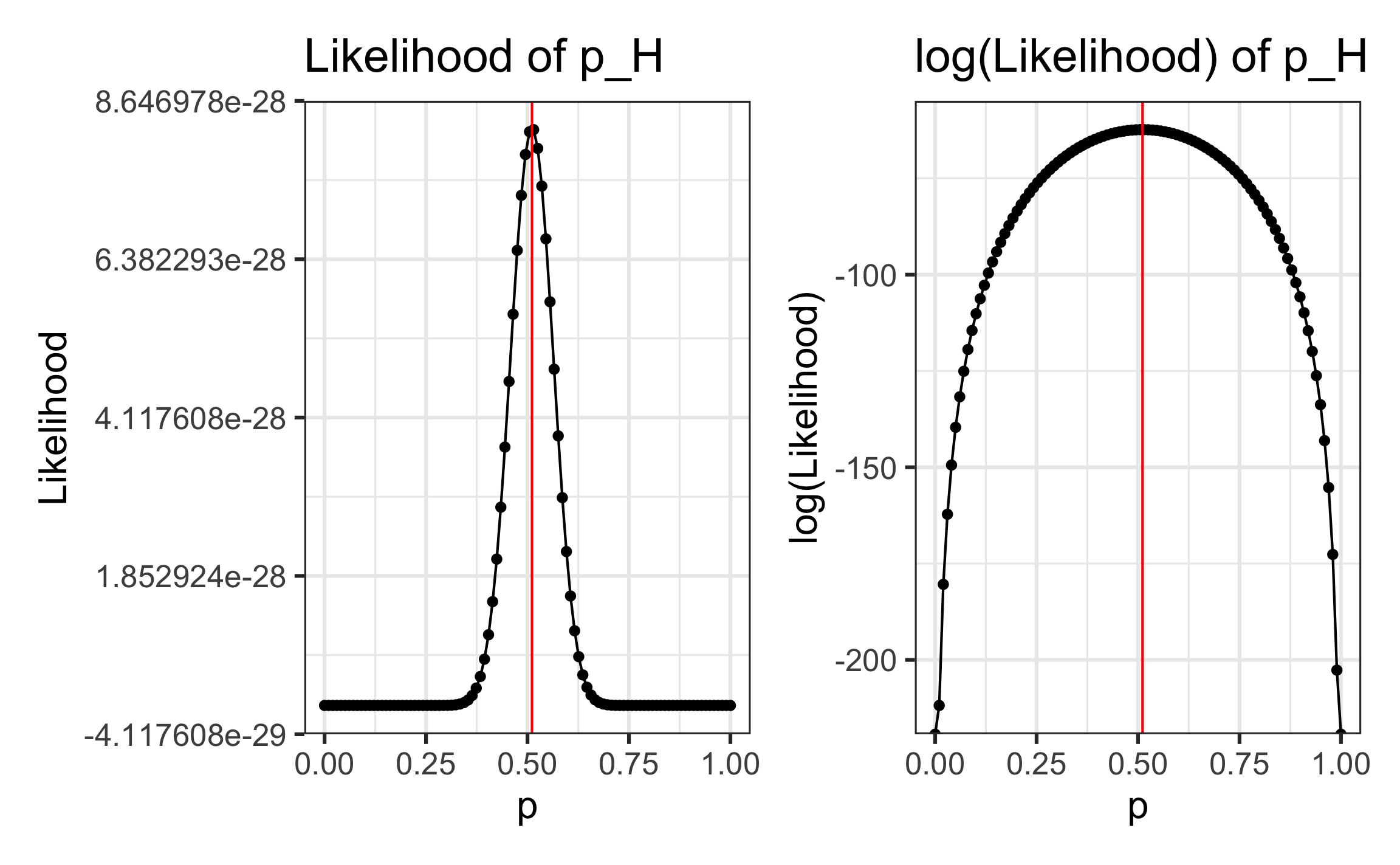

Visualizing the likelihood

What is your best guess for the MLE, $\hat{p}_H$?

- 0.489

- 0.500

- 0.511

- 0.556

Finding the maximum likelihood estimate

There are three primary ways to find the MLE:

✅ Approximate using a graph

✅ Numerical approximation

✅ Using calculus

Approximate MLE from a graph

Find the MLE using numerical approximation

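Specify a finite set of possible values for $p_H$ and calculate the likelihood for each value. The value with the largest likelihood approximates the MLE.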
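A grid search is one simple way to carry out this numerical approximation. This sketch assumes the Model 1 likelihood $p_H^{46}(1 - p_H)^{44}$ and a grid with step size 0.001; a finer grid gives a closer approximation.

```python
N_HOME, N_VISIT = 46, 44  # home and visiting fouls among the first three fouls of 30 games

def lik(p_h):
    """Model 1 likelihood p_H^46 (1 - p_H)^44."""
    return p_h**N_HOME * (1 - p_h) ** N_VISIT

# Finite set of candidate values strictly between 0 and 1.
grid = [i / 1000 for i in range(1, 1000)]
p_hat = max(grid, key=lik)  # candidate with the largest likelihood
print(p_hat)  # 0.511
```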

Find MLE using calculus

Find the MLE by taking the first derivative of the likelihood function, setting it equal to 0, and solving for $p_H$.

This can be tricky because of the Product Rule, so we can maximize the log(Likelihood) instead. Because the logarithm is strictly increasing, the same value maximizes both the likelihood and the log(Likelihood).
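A quick numerical check that the likelihood and log(Likelihood) peak at the same value, assuming the Model 1 likelihood $p_H^{46}(1 - p_H)^{44}$. The log turns the product into a sum, which is what makes the derivative easier.

```python
import math

N_HOME, N_VISIT = 46, 44

def lik(p):
    """Model 1 likelihood: a product of powers."""
    return p**N_HOME * (1 - p) ** N_VISIT

def log_lik(p):
    """Model 1 log-likelihood: the product becomes a sum."""
    return N_HOME * math.log(p) + N_VISIT * math.log(1 - p)

grid = [i / 1000 for i in range(1, 1000)]
# log is strictly increasing, so both functions are maximized by the same grid value.
print(max(grid, key=lik) == max(grid, key=log_lik))  # True
```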

Find MLE using calculus
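Assuming the Model 1 likelihood $Lik(p_H) = p_H^{46}(1 - p_H)^{44}$, the calculus steps can be sketched as:

$$
\begin{aligned}
\log Lik(p_H) &= 46 \log(p_H) + 44 \log(1 - p_H) \\
\frac{d}{dp_H} \log Lik(p_H) &= \frac{46}{p_H} - \frac{44}{1 - p_H} = 0 \\
46(1 - p_H) &= 44\, p_H \\
\hat{p}_H &= \frac{46}{90} \approx 0.511
\end{aligned}
$$

The second derivative, $-\frac{46}{p_H^2} - \frac{44}{(1 - p_H)^2}$, is negative for every $p_H$ in $(0, 1)$, so this critical point is a maximum.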


😐

Model 2: Conditional model

Is there a tendency for referees to call more fouls on the visiting team or home team?

Is there a tendency for referees to call a foul on the team that already has more fouls?

Model 2: Conditional model

Now let’s assume fouls are not independent within each game. We will specify this dependence using conditional probabilities.

- Conditional probability: $P(A \mid B) = \dfrac{P(A \text{ and } B)}{P(B)}$

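Define new parameters:

- $p_{H \mid N}$: Probability of a foul on the home team, given the teams have an equal number of prior fouls

- $p_{H \mid \text{H Bias}}$: Probability of a foul on the home team, given the home team has more prior fouls

- $p_{H \mid \text{V Bias}}$: Probability of a foul on the home team, given the visiting team has more prior fouls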
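Under the conditional model, the probability used for each foul depends on the fouls already called in that game: equal counts, more on the home team, or more on the visiting team. A minimal sketch, assuming fouls are encoded as a string of `H`/`V`; the function and parameter names (`p_n`, `p_hbias`, `p_vbias`) are hypothetical stand-ins for $p_{H \mid N}$, $p_{H \mid \text{H Bias}}$, and $p_{H \mid \text{V Bias}}$.

```python
def model2_contribution(fouls, p_n, p_hbias, p_vbias):
    """Likelihood contribution of one game's foul sequence under Model 2.

    The parameter applied to each foul depends on the fouls called before it:
      p_n:     P(foul on home | teams have equal fouls so far)
      p_hbias: P(foul on home | home team already has more fouls)
      p_vbias: P(foul on home | visiting team already has more fouls)
    """
    lik, home, visit = 1.0, 0, 0
    for foul in fouls:
        if home == visit:
            p = p_n
        elif home > visit:
            p = p_hbias
        else:
            p = p_vbias
        if foul == "H":
            lik *= p
            home += 1
        elif foul == "V":
            lik *= 1 - p
            visit += 1
        else:
            raise ValueError(f"unexpected foul code: {foul!r}")
    return lik

# H, H, H: p_n for the first foul, then p_hbias twice
print(model2_contribution("HHH", 0.5, 0.6, 0.4))
```

If all three parameters are equal, this reduces to the Model 1 contribution, which is one way to see that Model 1 is a special case of Model 2.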

Model 2: Likelihood contributions

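| Foul 1 | Foul 2 | Foul 3 | n | Likelihood contribution |
|---|---|---|---|---|
| H | H | H | 3 | $p_{H \mid N}\,(p_{H \mid \text{H Bias}})^2$ |
| H | H | V | 2 | $p_{H \mid N}\,p_{H \mid \text{H Bias}}\,(1 - p_{H \mid \text{H Bias}})$ |
| H | V | H | 3 | $(p_{H \mid N})^2\,(1 - p_{H \mid \text{H Bias}})$ |
| H | V | V | 7 | A |
| V | H | H | 7 | B |
| V | H | V | 1 | $(1 - p_{H \mid N})^2\,p_{H \mid \text{V Bias}}$ |
| V | V | H | 5 | $(1 - p_{H \mid N})\,(1 - p_{H \mid \text{V Bias}})\,p_{H \mid \text{V Bias}}$ |
| V | V | V | 2 | $(1 - p_{H \mid N})\,(1 - p_{H \mid \text{V Bias}})^2$ |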

Fill in A and B.

Likelihood function

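$$Lik(p_{H \mid N}, p_{H \mid \text{H Bias}}, p_{H \mid \text{V Bias}}) = (p_{H \mid N})^{25}(1 - p_{H \mid N})^{23}(p_{H \mid \text{H Bias}})^{8}(1 - p_{H \mid \text{H Bias}})^{12}(p_{H \mid \text{V Bias}})^{13}(1 - p_{H \mid \text{V Bias}})^{9}$$

(Note: The exponents sum to 90, the total number of fouls in the data.)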
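The exponents can be tallied by walking through each foul sequence and recording which condition (equal fouls, home team ahead, visiting team ahead) applied before each foul. Because the Model 2 likelihood factors into three separate pieces of the form $p^a(1 - p)^b$, the same calculus as in Model 1 gives each MLE as $a / (a + b)$. This is a sketch; the state labels `N`, `HBias`, and `VBias` are illustrative names.

```python
from collections import Counter

# Number of games with each foul sequence, from the likelihood contribution table.
counts = {
    "HHH": 3, "HHV": 2, "HVH": 3, "HVV": 7,
    "VHH": 7, "VHV": 1, "VVH": 5, "VVV": 2,
}

# tally[(state, foul)] = number of fouls of that type called in that state
tally = Counter()
for seq, n_games in counts.items():
    home = visit = 0
    for foul in seq:
        if home == visit:
            state = "N"
        elif home > visit:
            state = "HBias"
        else:
            state = "VBias"
        tally[(state, foul)] += n_games
        if foul == "H":
            home += 1
        else:
            visit += 1

for state in ("N", "HBias", "VBias"):
    h, v = tally[(state, "H")], tally[(state, "V")]
    print(state, h, v, round(h / (h + v), 3))  # exponents and MLE h / (h + v)
# N 25 23 0.521
# HBias 8 12 0.4
# VBias 13 9 0.591
print(sum(tally.values()))  # 90
```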

If fouls within a game are independent, how would you expect $p_{H \mid N}$, $p_{H \mid \text{H Bias}}$, and $p_{H \mid \text{V Bias}}$ to compare?

- They are all approximately equal.


If there is a tendency for referees to call a foul on the team that already has more fouls, how would you expect $p_{H \mid N}$, $p_{H \mid \text{H Bias}}$, and $p_{H \mid \text{V Bias}}$ to compare?

- They are approximately equal.

Next time

Using likelihoods to compare models

Chapter 3: Distribution theory
